“Should students be allowed to use ChatGPT in exams?” The first question was asked gently. By the time Stanford’s dean of education, Dan Schwartz, responded to it, however, the discussion had veered into something far more complex. It was about intent, trust, and what learning actually means.

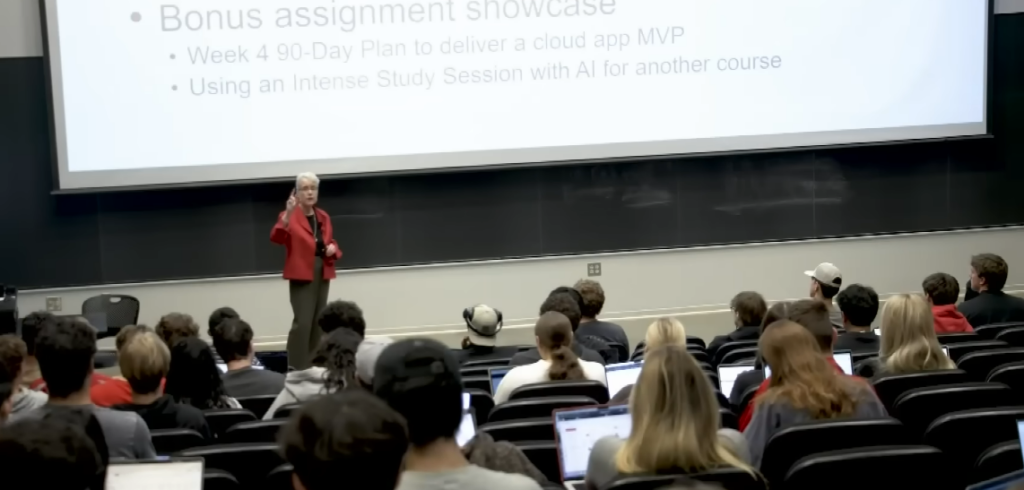

On a filmed college panel, Schwartz quietly presented a vision that seemed both grounded and ambitious. He argued that while AI won’t replace academics, it will force us to reconsider how we teach. There was a discernible change in the room: not defensive, not scared, but attentive. In a classroom increasingly mediated by algorithms, professors, who have historically been regarded as authorities on knowledge, are being asked to serve as mentors.

AI is becoming a permanent, if unexpected, presence in labs and lecture halls. Students rely on it for feedback on thesis drafts, essay organization, and code debugging. It is effective, sometimes excessively so. One humanities professor said that reviewing AI-assisted essays was like “grading a machine that aced the Turing Test but skipped the reading.”

| Topic | Details |
|---|---|
| Central Question | Can AI replace college professors or only enhance their role? |
| Location | Stanford University, California |
| Dominant View at Stanford | AI is a tool to augment, not replace, human educators |
| Key Faculty Voice | Dan Schwartz, Dean of Stanford Graduate School of Education |
| Popular Student Sentiment | Mixed: intrigued by AI’s use, wary of its limits in human learning |
| Projected Classroom Model | Hybrid: AI personalization + human mentorship by 2030 |
| Major Concerns Raised | Loss of mentorship, ethical grading, data bias, and teacher redundancy |
| Future Role of Professors | Curators, mentors, and adaptive thinkers, not just content transmitters |

Still, the uncertainty has its own energy. Some professors have built AI into their teaching, creating tools that offer round-the-clock support: rephrasing challenging ideas, producing examples, or simplifying intricate theories. One notably fresh idea is that students should teach the AI rather than merely query it. Schwartz called this the “Protégé Effect”: explaining something to someone else, human or machine, improves your own understanding. Though digital, the concept is remarkably similar to Socratic approaches.

I recently attended a presentation where students compared two lesson plans: one created by humans and one by artificial intelligence. The AI plan was clear, succinct, and pedagogically sound. It was also soulless. The students’ version had quirks, cultural references, and an unplanned moment where a joke went awry yet drew a real laugh. That laugh outshone the lesson itself. I was reminded of why we value the flaws in human learning.

The question of where this leads is not unique to Stanford, but the university may be in the best position to answer it. Many of the AI tools now reshaping education were developed just blocks from where these discussions are taking place. Even so, the leadership appears alert to the risks. Schwartz cautioned that careless use of AI could turn universities from hubs of growth into systems of optimization, producing work more quickly and with less critical thought.

He’s got a point. The distinction between a platform that assesses and a professor who mentors is subtle but important. The professor encourages curiosity. The platform confirms correctness.

However, the change that is taking place doesn’t have to be a sign of surrender. AI has the potential to serve as an intellectual mirror for students, reflecting their blind spots, testing their arguments, and offering fresh ideas. It might free instructors from mundane duties like scheduling, formatting, and grading so they can concentrate more on discussions, inquiries, and those intangible moments of revelation.

Interestingly, some classes now require that homework include the students’ AI prompts. This has nothing to do with surveillance; the goal is to make the process visible. By observing how students engage with AI, teachers can better understand their thought processes. Schwartz pointed out that this makes learning far more transparent.

Not everybody is prepared. According to a lecturer in computer science, dilution poses a greater risk than replacement. “If we’re not careful, we’ll start designing education that’s easy for AI to assess rather than rich for human exploration,” she said. Her worry wasn’t hypothetical. It arose after it became apparent that student submissions were becoming more and more alike: well-written but uncannily lifeless.

However, this is also why Stanford’s stance resonates. The university is bending AI to its purposes rather than prohibiting it outright. Through student-led creation, creative projects, and selective use, the tide of automation is being turned into something surprisingly collaborative.

The faculty aspires to redefine what effective teaching looks like by using AI carefully: not only imparting information but also encouraging reflection, and fostering complexity rather than merely delivering facts. In this way, AI may enhance rather than undermine the role of the professor.

Over the last year, the question about AI in the classroom has shifted from “Will it be used?” to “How should it be used?” I see hope in that reframing. AI can produce decent papers. It can solve problems. However, it still cannot raise an eyebrow at a student’s assumptions or build trust with a well-timed, silent pause. That remains the professor’s job.

Stanford’s vision for 2030 does not involve biometric grading or holographic lecturers. It is more subtle: combining technology with purpose. By accepting AI as an ally rather than a substitute, the university is laying a foundation for something much more robust.